The worker requires only one argument: the Spark master URL (spark://host:7077). Configuration can be fine-tuned by mounting /spark/conf/nf or /spark/conf/spark-env.sh, or by passing SPARK_* environment variables directly. If you want to use a different version of Spark and Hadoop, select the version you want on the download page, then copy the link from one of the mirror sites.
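
The Python below is a minimal sketch of what that single worker argument and the SPARK_* pass-through look like. It checks that a master URL has the spark://HOST:PORT shape and keeps only the SPARK_* variables from an environment. The function names are hypothetical. Requiring an explicit port roughly mirrors Spark's own master URL parsing; 7077 is only the port the master listens on by default.

```python
from __future__ import annotations

from urllib.parse import urlsplit


def parse_master_url(url: str) -> tuple[str, int]:
    """Split a standalone master URL such as spark://host:7077 into (host, port)."""
    parts = urlsplit(url)
    # Reject anything that is not exactly spark://HOST:PORT (no path, query or fragment).
    if (
        parts.scheme != "spark"
        or not parts.hostname
        or parts.port is None  # the port is required; 7077 is only the master's default
        or parts.path
        or parts.query
        or parts.fragment
    ):
        raise ValueError(f"expected spark://HOST:PORT, got {url!r}")
    return parts.hostname, parts.port


def spark_env_overrides(environ: dict[str, str]) -> dict[str, str]:
    """Keep only the SPARK_* variables, i.e. the ones meant to be passed through to Spark."""
    return {key: value for key, value in environ.items() if key.startswith("SPARK_")}


if __name__ == "__main__":
    import os

    # "spark-master" is a hypothetical host name for the master container.
    host, port = parse_master_url("spark://spark-master:7077")
    print(f"worker will register with {host}:{port}")
    print(spark_env_overrides(dict(os.environ)))
```

For example, SPARK_WORKER_CORES or SPARK_WORKER_MEMORY (both standard spark-env.sh settings) would be kept, while unrelated variables such as PATH are dropped.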

Install Apache Spark standalone

Depending on which role you need to start, pass either the master or the worker command to this container. To install Apache Spark on Ubuntu Linux, open the Apache Spark download site, go to the Download Apache Spark section and click the link from point 3; this takes you to a page with mirror URLs to download from. Apache Spark is an open-source, distributed, general-purpose cluster-computing framework: a fast, unified analytics engine for big data and machine learning processing.
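
Instead of copying a mirror link by hand, the download URL for a given Spark/Hadoop pair can be built from the file naming pattern of Apache's release archive. This is a sketch: the archive.apache.org base URL is swapped in for the mirrors mentioned above, the function name is hypothetical, and not every Spark/Hadoop combination is published, so check the version on the download page first.

```python
# Apache's long-term release archive (used here instead of a mirror).
ARCHIVE_BASE = "https://archive.apache.org/dist/spark"


def spark_download_url(spark_version: str, hadoop_version: str) -> str:
    """Return the archive URL of a prebuilt package such as spark-3.3.0-bin-hadoop3.tgz."""
    package = f"spark-{spark_version}-bin-hadoop{hadoop_version}.tgz"
    # Releases are stored in one directory per Spark version: .../spark-<version>/<package>
    return f"{ARCHIVE_BASE}/spark-{spark_version}/{package}"


if __name__ == "__main__":
    print(spark_download_url("3.3.0", "3"))
```

On Ubuntu, the printed URL can then be fetched with wget and unpacked with tar -xzf.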

How to install Apache Spark standalone

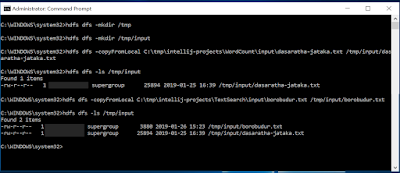

Select Ubuntu, then Get and Launch, to install the Ubuntu terminal on Windows (if the install hangs, you may …).

Welcome to our guide on how to install Apache Spark on Ubuntu 22.04, 20.04 and 18.04.

Installing Apache Spark Standalone-Cluster in Windows, by Sachin Gupta: here I will try to lay out a simple guide to installing Apache Spark on Windows (without HDFS) and linking it to a local standalone Hadoop cluster.

Go to Start > Control Panel > Turn Windows features on or off.

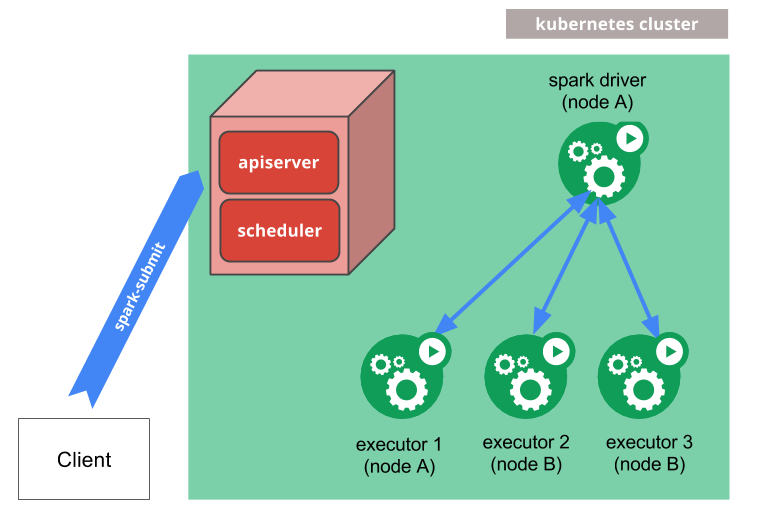

Standalone mode supports two container roles: master and worker. Apache Spark can also be installed under the Windows Subsystem for Linux (WSL). Note that the default configuration exposes the web UI ports, 8080 for the master container and 8081 for the worker container.
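
A minimal sketch of the master/worker dispatch, assuming a hypothetical container entrypoint that takes either "master" or "worker spark://HOST:PORT" and that Spark's bin directory (with spark-class) is on PATH. It maps each role to the class Spark's standalone launch scripts start, and uses Spark's default web UI ports: 8080 for the master and 8081 for a worker. The function names are illustrative only.

```python
from __future__ import annotations

# Fully qualified classes that Spark's standalone launch scripts start for each role.
ROLE_CLASS = {
    "master": "org.apache.spark.deploy.master.Master",
    "worker": "org.apache.spark.deploy.worker.Worker",
}

# Spark's default web UI port for each role.
DEFAULT_UI_PORT = {"master": 8080, "worker": 8081}


def role_command(role: str, *args: str) -> list[str]:
    """Build the spark-class command for a hypothetical 'master | worker URL' entrypoint."""
    if role not in ROLE_CLASS:
        raise ValueError(f"role must be 'master' or 'worker', got {role!r}")
    if role == "worker" and (not args or not args[0].startswith("spark://")):
        # A worker needs the master URL as its (only required) first argument.
        raise ValueError("worker expects the master URL: spark://HOST:PORT")
    return ["spark-class", ROLE_CLASS[role], *args]


def ui_url(host: str, role: str) -> str:
    """Address of the role's web UI when its default port is published unchanged."""
    return f"http://{host}:{DEFAULT_UI_PORT[role]}"


if __name__ == "__main__":
    # Example: what "worker spark://spark-master:7077" would run.
    print(role_command("worker", "spark://spark-master:7077"))
    print("worker web UI:", ui_url("localhost", "worker"))
```

In a real entrypoint, the returned list could be handed to subprocess.run(..., check=True); keeping command construction separate from execution makes the dispatch easy to check without starting Spark.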
